by Darrin Kite, Matthew Bult, and Bryan Fazzari, Schweitzer Engineering Laboratories, Inc., USA

The efficiency and value of a distribution automation (DA) system are generally measured by a few key metrics:

- SAIDI: System Average Interruption Duration Index

- SAIFI: System Average Interruption Frequency Index

- CAIDI: Customer Average Interruption Duration Index

- CMI: Customer Minutes of Interruption
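As a rough illustration of how these metrics relate, the sketch below computes them from a list of sustained interruptions using the commonly used definitions (for example, those in IEEE Std 1366). CMI sums customers interrupted times interruption duration, SAIDI and SAIFI normalize by the total number of customers served, and CAIDI equals SAIDI divided by SAIFI. The `Interruption` record and the sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Interruption:
    """One sustained interruption event (hypothetical record layout)."""
    customers_interrupted: int
    duration_min: float  # time to restore these customers, in minutes


def reliability_indices(events, customers_served):
    """Return CMI, SAIDI, SAIFI, and CAIDI for one reporting period."""
    cmi = sum(e.customers_interrupted * e.duration_min for e in events)
    total_ci = sum(e.customers_interrupted for e in events)
    saidi = cmi / customers_served       # minutes per customer served
    saifi = total_ci / customers_served  # interruptions per customer served
    # Minutes per interrupted customer; equivalent to SAIDI / SAIFI.
    caidi = cmi / total_ci if total_ci else 0.0
    return {"CMI": cmi, "SAIDI": saidi, "SAIFI": saifi, "CAIDI": caidi}


events = [Interruption(1200, 90.0), Interruption(300, 45.0)]
print(reliability_indices(events, customers_served=10_000))
```

Because CMI weights every interruption by its duration, a DA scheme that restores most of an outage's customers within minutes lowers CMI and SAIDI directly.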

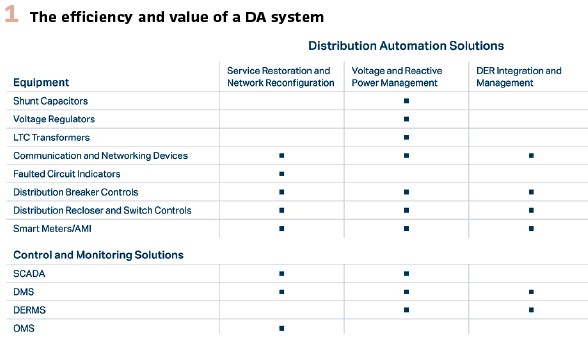

If implemented and operated correctly, DA can improve the service that a utility provides to its customers by quickly locating permanent faults, reconfiguring feeders to restore load to the maximum number of customers during an outage, and controlling voltage to reduce power demand or system losses while keeping voltages within American National Standards Institute (ANSI)-defined limits. When implementing DA solutions, utility operations must also consider whether the solution allows equipment to be serviced easily and whether it can withstand weather events with the least impact to customers and without adverse consequences.
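To make the voltage constraint concrete, the following sketch picks a conservation voltage reduction (CVR) regulator setpoint from a handful of metered end-of-line voltages. It assumes ANSI C84.1 Range A service limits on a 120 V base (114 V to 126 V), a hypothetical safety margin, and a deliberately simplified model in which changing the setpoint shifts every downstream voltage by the same amount. A real VVO solution would verify the result against a load-flow model.

```python
RANGE_A_MIN_V = 114.0  # ANSI C84.1 Range A service voltage, 120 V base
RANGE_A_MAX_V = 126.0


def cvr_setpoint(current_setpoint_v, metered_v, margin_v=1.0):
    """Return a regulator setpoint that places the lowest metered voltage at
    RANGE_A_MIN_V + margin_v.

    Simplifying assumption (hypothetical): a setpoint change shifts every
    downstream voltage by the same number of volts.
    """
    headroom_v = min(metered_v) - (RANGE_A_MIN_V + margin_v)
    # Positive headroom lowers the setpoint (more CVR); negative headroom raises it.
    proposed_v = current_setpoint_v - headroom_v
    # Never command a setpoint above the Range A upper limit.
    return min(proposed_v, RANGE_A_MAX_V)


print(cvr_setpoint(123.0, [119.5, 117.8, 116.2]))  # lowest meter is 1.2 V above 115 V
```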

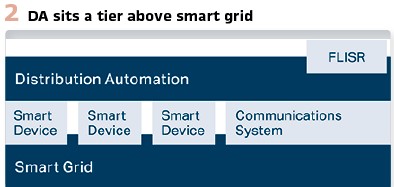

Examples of DA solutions are wide-area control systems such as fault location, isolation, and service restoration (FLISR); wide-area optimization systems such as volt/VAR optimization (VVO); and “flip-flop” style autotransfer schemes coordinating two or more adjacent reclosers or switches. As the name suggests, DA is primarily used on electric distribution systems, but it is no longer unusual to find it on subtransmission systems as well. A clear definition of DA helps put the rest of this article into perspective: distribution automation is any solution that coordinates more than one outside-the-fence device to automate a task that would otherwise be manual. Depending on how a utility defines its asset boundaries, low-side substation assets may also be part of a DA scheme. DA could be described as a subset of the smart grid or as a solution space that sits a tier above it, dependent on all the data and communications that smart grid devices, projects, and investments make available (see Figure 2).
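A “flip-flop” autotransfer is perhaps the smallest example that still fits this definition, because it coordinates two outside-the-fence devices. The sketch below is a hypothetical, simplified version: two source reclosers can feed a common load, at most one is closed at a time, and the scheme switches with an open transition (open before close). Real schemes add time delays so that source-side reclosing can finish before a transfer, supervision for faulted load sections, and communications-loss handling, all of which are omitted here.

```python
from dataclasses import dataclass


@dataclass
class AutoTransfer:
    """Hypothetical two-source autotransfer; `closed` names the source recloser that is closed."""
    closed: str = "preferred"

    def step(self, preferred_live: bool, alternate_live: bool) -> list:
        """Evaluate source voltages once and return ordered (action, recloser) commands."""
        if self.closed == "preferred" and not preferred_live and alternate_live:
            # Preferred source lost: open it first, then pick up load from the alternate.
            self.closed = "alternate"
            return [("open", "preferred"), ("close", "alternate")]
        if self.closed == "alternate" and preferred_live:
            # Preferred source restored: return the load to it (open transition).
            self.closed = "preferred"
            return [("open", "alternate"), ("close", "preferred")]
        return []  # no change needed, or no live source to transfer to


ats = AutoTransfer()
print(ats.step(preferred_live=False, alternate_live=True))
# [('open', 'preferred'), ('close', 'alternate')]
```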

The rapid acceleration of computing, communications, and artificial intelligence innovation cycles has propelled us into a world of technological advancement that few could have predicted. Simultaneously, other societal objectives, such as electrification, zero-emissions standards, and consumer technology adoption, are changing how utilities design and operate the distribution system.

These two macroeconomic trends create a landscape for invention that offers many opportunities and raises many questions that have yet to be answered. We cannot discuss the changes affecting our grid without acknowledging that the brunt of the work of solving these challenges and seizing these opportunities falls on a willing coalition of operators, engineers, and vendors working together to design our future.

While DA technology is in a constant state of change, some things remain the same. The financial responsibility of each power producer, energy supplier, and grid operator is unchanged: maintain superior reliability while incurring the lowest annual operational expenses and, where applicable, securing a solid return on investment for shareholders.

Centralized Vs. Edge

Wherever sufficient computing resources can be found, whether at the pole top, pole bottom, substation, front-end processor, or SCADA server, chances are a DA scheme has been implemented there. The many different DA solutions available today can easily be attributed to the ingenuity of engineers and the diversity of today’s distribution systems.

As with most engineering problems, each location has its own tradeoffs. The closer to the edge a solution gets, the less dependent on communications bandwidth it is and the less data it tends to need. However, edge computing generally has far less performance capability than centralized computing, and a solution running at the edge generally has limited visibility of the system as a whole.

Conversely, centralized solutions have wider system visibility but rely more on larger quantities of data traversing communications networks of varying quality and reliability and are often more complex to implement and troubleshoot as a result.

Our industry is seeing the development of a new DA design pattern, modeled after the SCADA design pattern that preceded it: a tiered approach. This approach blends centralized and edge computing into a single coordinated solution. One attractive benefit of this pattern is the ability to place each function where it is simplest and performs best, because not all functions require system-wide visibility to operate effectively. Edge DA must understand holistic commands sent from an upper-tier system that manages a wide area, but management of small feeder or substation segments could be left entirely to the edge device. The tiered design pattern also supports coordinating arrays of feeders managed as independent microgrids, in anticipation of the expected proliferation of distributed energy resources (DERs).
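A minimal sketch of this division of labor, assuming a hypothetical upper tier that sends only a holistic objective (a maximum import limit for a feeder segment) and an edge controller that decides local DER output on its own. The names `UpperTierCommand`, `EdgeController`, and `max_import_kw` are illustrative assumptions, not a product API. The edge keeps acting on the last command it received, so a communications outage degrades wide-area coordination without stopping local control.

```python
from dataclasses import dataclass, field


@dataclass
class UpperTierCommand:
    """Hypothetical wide-area objective sent by the upper tier (no device-level switching)."""
    max_import_kw: float


@dataclass
class EdgeController:
    """Hypothetical edge controller that manages one feeder segment autonomously."""
    der_capacity_kw: float
    # Until the upper tier sends a limit, import is unconstrained.
    command: UpperTierCommand = field(
        default_factory=lambda: UpperTierCommand(max_import_kw=float("inf"))
    )

    def receive(self, command: UpperTierCommand) -> None:
        # The latest command is retained and reused if communications are later lost.
        self.command = command

    def der_dispatch_kw(self, local_load_kw: float) -> float:
        """Local decision: run DERs just enough to keep segment import under the limit."""
        shortfall_kw = local_load_kw - self.command.max_import_kw
        return min(max(shortfall_kw, 0.0), self.der_capacity_kw)


edge = EdgeController(der_capacity_kw=500.0)
edge.receive(UpperTierCommand(max_import_kw=600.0))
print(edge.der_dispatch_kw(local_load_kw=800.0))  # 200.0
```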

Monolithic Architecture and the Emergence of Modular Alternatives

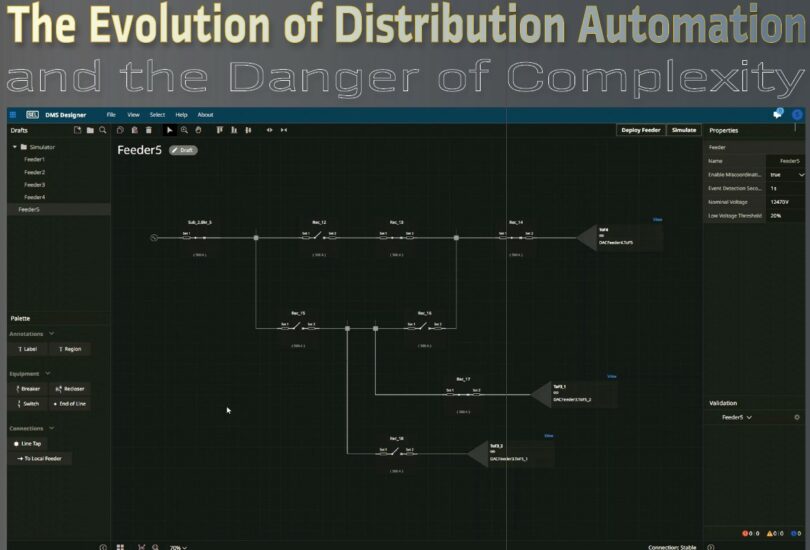

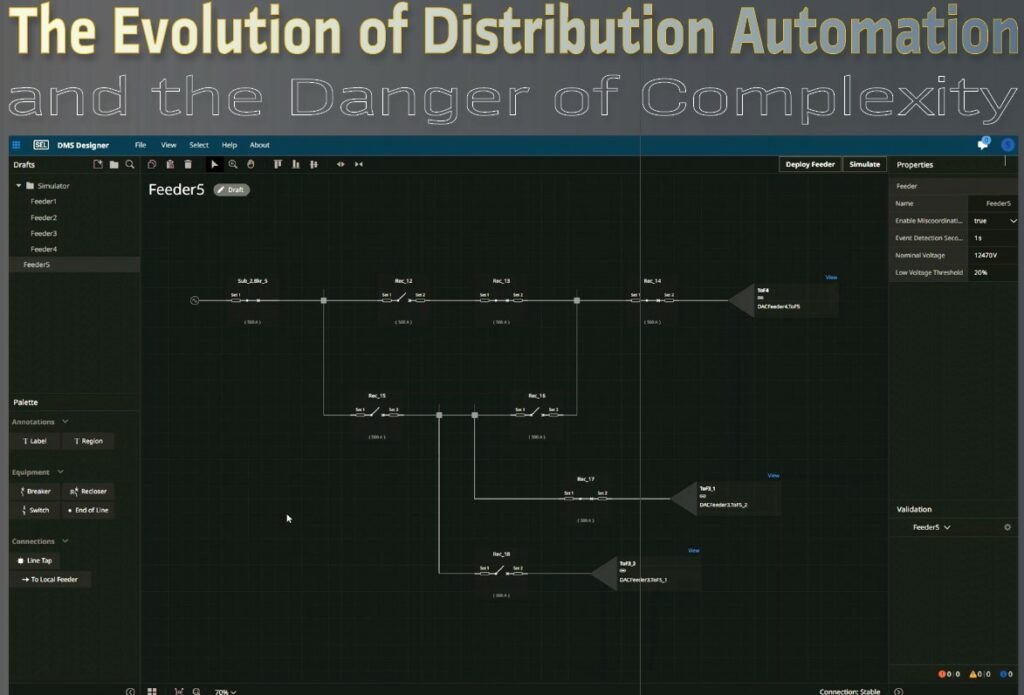

DA has most commonly been implemented in three different ways: in the device (typically peer to peer), regionally (in an automation controller, often located in the substation or communications front end), and centrally. While the pros and cons of each approach deserve their own focused examination, this section focuses instead on disruption in centralized DA architectures that may change the way utilities purchase and operate these types of systems.

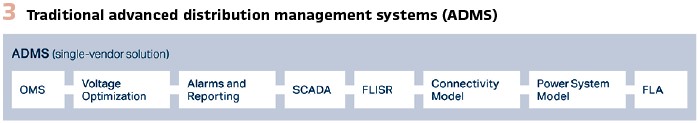

DA solutions running in a centralized fashion are usually part of a monolithic software package provided by a single vendor. Traditionally referred to as advanced distribution management systems (ADMSs), these packages can perform almost every function needed to operate and understand the grid, including SCADA, outage management system (OMS) functions, FLISR, VVO, distributed energy resource management system (DERMS) functions, fault location analysis (FLA), historian functions, and more. These solutions perform their jobs well and represent a singular vision from the selected vendor. Their high-level advantage is that all functions are integrated because they come from the same vendor, often with comprehensive vendor support and management (see Figure 3). Because everything comes from one source, standardization and interoperability are not architectural requirements. This advantage, however, also creates a problem for utilities: centralized systems are significant capital software purchases that are often not designed for interoperability, effectively creating vendor lock-in that makes it difficult to incorporate innovative functionality from other vendors when desired. Additionally, they can introduce changes to business and operating procedures that can affect the safe and effective operation of the grid.

As grid operation grows more complex, procedures must be able to change fluidly, which means the software must be flexible enough to meet the utility’s current and future requirements.

The concept of adaptable modular architectures for grid operations has become a frequent topic at industry conferences and among solution manufacturers (see Figure 4), and some of these architectures are in service as of this writing. An adaptable modular architecture creates an ecosystem for a marketplace of innovation from many vendors, each focused on solving specific challenges facing the grid of the future, such as DER penetration, microgrid participation and dispatch, weather-driven variability, staffing or organizational changes, technical limitations, and varying regional needs.

This coordinated operation of purpose-focused functionality gives the electric power industry an opportunity to focus on simplification, reducing the “number of clicks” it takes to execute a task or configure a system. Much of this simplification depends on two factors: how well a given vendor understands the user’s specific problem and how well the solution integrates within its ecosystem. The first factor can be addressed by enabling different vendors to deliver functionality in their areas of expertise. The second can be addressed by an industry focus on standardized interfaces, which enable multiple vendor solutions to interoperate without re-architecting the information back end or importing system data into each solution and risking multiple versions of the as-operated system.
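The interface-standardization idea can be sketched with a Python `Protocol`: every module, whatever its vendor, reads the same as-operated model through one agreed interface instead of importing its own copy. The interface methods (`switch_ids`, `is_closed`) and both modules are hypothetical placeholders; in practice, such interfaces are often built on industry standards such as the IEC Common Information Model (CIM).

```python
from typing import Dict, List, Protocol


class NetworkModel(Protocol):
    """Hypothetical standardized read interface to the single as-operated model."""
    def switch_ids(self) -> List[str]: ...
    def is_closed(self, switch_id: str) -> bool: ...


class DaModule(Protocol):
    """Hypothetical contract that every vendor module implements."""
    name: str
    def run(self, model: NetworkModel) -> List[str]: ...


class InMemoryModel:
    """Stand-in for the utility's back end; every module reads this one instance."""
    def __init__(self, states: Dict[str, bool]) -> None:
        self._states = dict(states)

    def switch_ids(self) -> List[str]:
        return sorted(self._states)

    def is_closed(self, switch_id: str) -> bool:
        return self._states[switch_id]


class OpenPointReport:
    """Vendor A (hypothetical): lists open switches."""
    name = "open-points"

    def run(self, model: NetworkModel) -> List[str]:
        return [s for s in model.switch_ids() if not model.is_closed(s)]


class ClosedSwitchCount:
    """Vendor B (hypothetical): summarizes closed switches."""
    name = "closed-count"

    def run(self, model: NetworkModel) -> List[str]:
        closed = sum(model.is_closed(s) for s in model.switch_ids())
        return [f"{closed} closed"]


def run_all(modules: List[DaModule], model: NetworkModel) -> Dict[str, List[str]]:
    """Run each module against the same shared model; no per-vendor data import."""
    return {m.name: m.run(model) for m in modules}


model = InMemoryModel({"R1": True, "R2": True, "TIE1": False})
print(run_all([OpenPointReport(), ClosedSwitchCount()], model))
```

Adding a third vendor's module only requires implementing `run`; neither the model nor the existing modules change.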

In a properly built system, the utility’s primary system viewer, whether that is an OMS, ADMS, distribution management system (DMS), energy management system (EMS), or something else, remains in place while each modular subsystem focuses on a specific task or function. Once established, this architecture could allow users to obtain the best software available for any given function rather than using whatever the selected ADMS provides. It allows existing capital assets to continue to be depreciated, because their usability is maintained, while the utility simultaneously gains a capital asset that performs a new or enhanced function. There are many approaches to the financial aspect of software and support, depending on the type of utility. However, some questions always apply: Does the increased cost bring increased efficiency, lower operational expenses, or simpler systems and processes? Does it solve a real problem?

Possible Impact of New DA Architectures

The changes in DA solutions discussed previously shift ownership toward the end user. In a tiered operating model with an adaptable modular architecture, end users can configure a system that meets their specific needs, which may also enhance other business practices.

Whether a utility wishes to develop in-house system integration to support the publication of near-real-time data or the sharing and updating of impedance models and settings between applications, or prefers to operate smaller, more loosely integrated systems that may better fit smaller municipal utilities or co-ops, this approach should support it all. Any vendor selected to provide the utility’s user interfaces should be able to support data from any source the utility selects. Without that capability, utilities are limited to systems that may integrate only with data sources and functions from a single vendor, which stifles the end user’s ownership of its system and data.

Utility business processes can also be significantly affected by grid operations software. Operations software like DA must be flexible enough to support the processes the utility prefers. A common mistake operational software designers make is assuming particular organizational structures, data ownership models, and field-focused procedures. A utility may prefer that all modeling activity be owned by a geographic information system (GIS) team. It may prefer that a specific engineering group own the impedance model subset. Yet another group may own data point maps for substation and field area assets. All of these arrangements should be acceptable and supported; assuming one over another can create a difficult experience for the end user. The future distribution system will require faster adoption of new technology and solutions flexible enough to let each utility manage its settings, data, and operations according to the processes and business organization that work for it.

Complexity: The Enemy of Trust

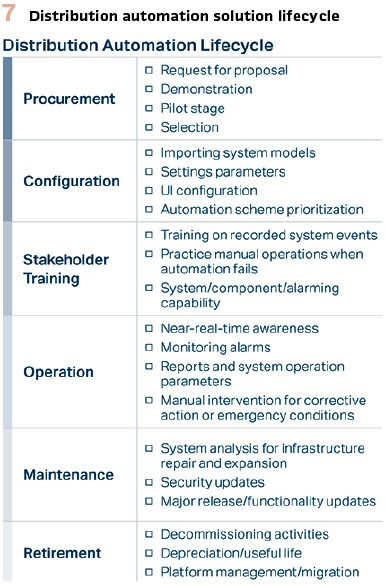

One of the biggest risks to DA as automation solutions proliferate and scale is complexity. The reason is simple: complex systems are hard to understand, and we tend not to trust systems we don’t understand. Complexity makes change, operations, and maintenance harder and requires multiple stakeholders to understand how the entire system operates. When evaluating or using DA solutions, it’s important that the vendor and the utility maintain constant communication about how the product is used throughout its entire lifecycle. The constant question should be: “Can this process or functionality be simplified?” This is especially true when multiple DA functions are coordinated and ranked by operating priority. To add to the complexity, those priorities must shift with seasonal energy usage and extreme weather events. It’s the industry’s responsibility to drive constantly toward maximum simplicity so that infrastructure investments are fully utilized and solutions continue to operate in the future. Figure 7 lists some important considerations and questions for each step in a DA solution’s lifecycle.

Procurement and Evaluation

It is common for utilities to approach change, especially change in the form of wide-area control systems such as those in the DA solution space, with caution. This caution frequently takes the form of a pilot project. Pilot projects are generally designed to be as small and simple as possible while still providing enough realism and variability to fully evaluate the performance and features of one or more solutions, often from more than one vendor. Pilots are often broken into multiple stages of evaluation, including test bench testing, lab testing, training-yard testing, and eventually live testing and long-term evaluation. Utility engineers must ensure that the vendor clearly communicates what it takes to achieve a successful pilot, starting with procurement. What must the utility purchase to run the solution in a lab? Is the cost of a pilot tied to its scale, its duration, or both? Can the pilot be run without primary equipment? Does the vendor provide any add-on capabilities for additional realism? Is any data needed from GIS, SCADA, or any other system?

Configuration

Visit any industry trade show or schedule a demonstration of a DA solution such as FLISR or VVO, and what is on display is often a curated solution operating at peak performance.

While learning how the solution operates once in service is important, it is equally important to understand how complex the solution is to configure and how intuitive it is to expand and maintain over time. Suboptimal workflows that require many repetitive configuration tasks, with settings spread across multiple services, add complexity both when expanding the system and when troubleshooting after something goes wrong. Configuration complexity can grow quickly as the pilot project scales into a true deployment. Another consideration is how easily the solution integrates with other enterprise systems and which formats or protocols it supports. The practical consideration, of course, is cost: complexity in configuration and integration adds to the upfront cost and implementation time and also constrains the system’s ability to grow and change over time. A great way to make sure the utility and vendor are on the same page regarding configuration complexity is to ask the vendor to demonstrate the solution in a conference room setting or to have a “back to basics” conversation at the whiteboard.
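One common way to reduce repetitive configuration is to define shared settings once per feeder (or per scheme) and store only the exceptions per device. The sketch below assumes hypothetical setting names and values. It also rejects unknown setting names, so a typo fails loudly instead of silently creating a new setting.

```python
# Hypothetical feeder-level template: shared settings are defined once.
FEEDER_TEMPLATE = {
    "loss_of_voltage_delay_s": 5.0,
    "max_restoration_load_a": 400.0,
    "scheme_enabled": True,
}


def device_settings(template, device_ids, overrides=None):
    """Expand a feeder template into per-device settings, applying per-device exceptions."""
    overrides = overrides or {}
    unknown_devices = set(overrides) - set(device_ids)
    if unknown_devices:
        raise ValueError(f"overrides for unknown devices: {sorted(unknown_devices)}")
    settings = {}
    for device in device_ids:
        exceptions = overrides.get(device, {})
        unknown_keys = set(exceptions) - set(template)
        if unknown_keys:
            # Fail loudly on typos instead of silently adding a new setting.
            raise KeyError(f"{device}: unknown settings {sorted(unknown_keys)}")
        settings[device] = {**template, **exceptions}
    return settings


settings = device_settings(
    FEEDER_TEMPLATE,
    ["R1", "R2", "R3"],
    overrides={"R2": {"max_restoration_load_a": 250.0}},
)
print(settings["R2"]["max_restoration_load_a"])  # 250.0
```

With this pattern, changing a shared value such as the loss-of-voltage delay is one edit to the template rather than one edit per device.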

Testing and Training

Recall the definition of DA provided earlier in this article: DA solutions coordinate more than one outside-the-fence device to achieve a function that would otherwise be manual. The primary reason DA schemes are deactivated is lack of trust. Trust requires enough familiarity with a product or solution that its operation can be predicted and understood. This familiarity must exist within several groups at a utility, including distribution engineering, distribution system operators, and line crews. Vendors often provide a training environment, a simulation environment, educational courses, and more to build enough trust in the solution that the utility allows it to operate in its intended automated fashion. Onboarding new engineers, operators, and line crews, along with recurring training, adds to the operational cost of the solution over time. Questions to keep at the forefront are:

1. What tools does the solution provide that can be integrated into internal training?

2. What additional tools are needed to maximize the impact of that training?

3. Can training tools we already have be leveraged with this system?

Operation

It is critical that a DA system provide an easy-to-understand view of the system automation so that operators and engineers can quickly digest information and make optimal decisions when field crews are working or when abnormal conditions require corrective action. Ideally, most of the automation is virtually hands-off and operates as planned. The real test of the system is how much work is involved when it does not operate as expected. What if the FLISR system didn’t restore as much load as expected, or did not account for load that appeared when a generation resource was lost? What if the volt/VAR system caused unacceptably low voltages when its conservation voltage reduction (CVR) mode was activated? Retrieving reports and tracing the data that went into an automated decision must be simple for all stakeholders. Crucially, those reports must be easy to analyze and understand so that the root cause can be determined and corrective action taken quickly.
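One way to keep automated decisions traceable is to have each DA function emit a structured record of the inputs it used, the action it took, and why. The sketch below applies that idea to the CVR question above. The `DecisionRecord` fields, the meter names, and the 114 V threshold (the ANSI C84.1 Range A lower limit on a 120 V base) are illustrative assumptions, not any product’s report format.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class DecisionRecord:
    """Hypothetical audit record written for every automated DA decision."""
    function: str  # e.g., "VVO" or "FLISR"
    action: str
    inputs: dict   # the measurements the decision was based on
    reason: str


def cvr_decision(bellwether_v, min_allowed_v=114.0):
    """Decide whether to stay in CVR mode and record why."""
    low = sorted(name for name, volts in bellwether_v.items() if volts < min_allowed_v)
    if low:
        return DecisionRecord("VVO", "exit_cvr", dict(bellwether_v),
                              f"voltage below {min_allowed_v} V at {', '.join(low)}")
    return DecisionRecord("VVO", "stay_in_cvr", dict(bellwether_v),
                          f"all metered voltages at or above {min_allowed_v} V")


record = cvr_decision({"M1": 118.2, "M2": 113.6})
print(json.dumps(asdict(record), indent=2))
```

Because the record carries the exact inputs alongside the action, an engineer reviewing an unexpected CVR exit can see which meter triggered it without reconstructing the event from raw historian data.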

Updates and Maintenance

The distribution system is constantly changing. New reclosers, switches, and conductors are added, and expanding communications coverage makes it possible to add new circuits to DA solutions. For any given DA product, consider what is involved when real-world changes in the distribution system require changes to DA settings. How much of the distribution system is affected, and how long does it take to make those changes? These workflows must be well defined and simple; otherwise, mistakes in the form of settings errors can degrade the performance of the DA system.
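Part of that workflow can be automated. Assuming a radial feeder stored as a hypothetical parent-to-children map and a list of devices in each DA scheme, the sketch below finds the devices affected when a new recloser is inserted (its upstream neighbor, the recloser itself, and everything it now feeds) and reports which schemes include any of them and therefore need their settings reviewed.

```python
from collections import deque


def downstream(topology, start):
    """Breadth-first list of devices fed through `start` (topology maps parent -> children)."""
    found, seen = [], {start}
    queue = deque(topology.get(start, []))
    while queue:
        node = queue.popleft()
        if node in seen:  # guard against loops, e.g., a tie modeled as closed
            continue
        seen.add(node)
        found.append(node)
        queue.extend(topology.get(node, []))
    return found


def affected_by_insertion(topology, parent, new_device):
    """Devices whose settings may change when `new_device` is added below `parent`."""
    return [parent, new_device] + downstream(topology, new_device)


def schemes_to_review(scheme_members, affected):
    """Names of DA schemes that contain at least one affected device."""
    hit = set(affected)
    return sorted(name for name, members in scheme_members.items() if hit & set(members))


# Hypothetical feeder after inserting recloser R1A between R1 and R2.
topology = {"BKR1": ["R1"], "R1": ["R1A"], "R1A": ["R2"], "R2": ["TIE1"]}
schemes = {
    "FLISR-north": ["BKR1", "R1", "R2", "TIE1"],
    "VVO-north": ["BKR1", "REG1", "CAP1"],
}
affected = affected_by_insertion(topology, "R1", "R1A")
print(affected)                              # ['R1', 'R1A', 'R2', 'TIE1']
print(schemes_to_review(schemes, affected))  # ['FLISR-north']
```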

Another facet to updating DA systems is cybersecurity. Recent geopolitical events have highlighted the need to conduct frequent evaluations of any software or firmware system deployed in the electric power system. Systems must be engineered to be easily updated to address security vulnerabilities without significant intervention from the system provider. Security must be engineered into the system from the beginning, and as has been demonstrated, this is no longer optional.

Conclusion

The distribution system is experiencing significant changes. The proliferation of DERs, electric vehicles, and devices capable of providing large amounts of data will continue. DA systems must continue to evolve to cope with these changes, make more data actionable faster, and use these newly connected assets to maximum effect. This article has discussed some of that evolution. There is a great deal of opportunity for innovation once our industry begins treating distribution feeders as microgrids, from updating field devices and FLISR systems, to supporting directional fault detection and accurate fault location, to incorporating inverters for reactive power and frequency support. If we expect DA to scale, as we know it must, it is the responsibility of all of us to create and maintain trustworthy solutions laser-focused on simplicity.

Biographies:

Darrin Kite is a senior engineering manager at Schweitzer Engineering Laboratories, Inc. (SEL), where he is responsible for leading the development of SEL automation products and SEL distribution automation technology. During his career, he has worked as a distribution management system administrator and a utility data analyst for demand response and renewable generation integration programs. He joined Research and Development at SEL in 2012, leading the development and application of various automation products, including the SEL Real-Time Automation Controller and the Blueframe® application platform. Darrin earned a BS in renewable energy engineering from Oregon Tech and a graduate certificate in digital product management from Boston University. He is a Certified Information Systems Security Professional and a member of IEEE.

Matthew Bult is a senior product manager at Schweitzer Engineering Laboratories, Inc. (SEL), where he is responsible for Distribution Management System (DMS) software. He has worked in Research and Development at SEL since 2021, aiding the development, implementation, and support of DMS software components. Matt earned a BS in electrical engineering from South Dakota State University and after college worked for Evergy in both Kansas and Missouri (formerly Westar Energy and KCP&L). At Evergy, Matt held various roles in generation, IT, and distribution—focusing on technology, operations, and strategic planning.

Bryan Fazzari is vice president of Marketing and a principal engineer at Schweitzer Engineering Laboratories, Inc. (SEL). A patented inventor, Bryan has previous experience as an automation product owner and engineering manager. He has guided the design, development, marketing, and education strategies of numerous next-generation distribution automation solutions, including fault location, isolation, and service restoration (FLISR); heuristic volt/VAR control; photovoltaic (PV) plant control; industrial high-speed load shedding; and wide-area data collection, visualization, and monitoring systems. Bryan is a member of IEEE and PES.