How standards improve substation cybersecurity

by Fred Steinhauser and Andreas Klien, OMICRON electronics, GmbH, Austria

How the security of our substations can be improved: Due to their large number and their often insufficient protection mechanisms, substations represent a major attack vector on the power grid. In recent years, many utilities have started using state-of-the-art technology and standards to greatly improve the cybersecurity of their substations.

The first idea for improving cybersecurity usually concerns the “hardening” of the system, that is, how difficult it is for attackers to penetrate the barriers – a very technical view. However, there are more aspects of cybersecurity to consider.

Cybersecurity always depends on the interaction of people, processes and technology. It is interesting that (technology) standards can help in all three areas. To look at substation cybersecurity in a holistic way, we recommend using the security functions Identify, Protect, Detect, Respond, and Recover, as introduced by the Cybersecurity Framework (CSF) of the National Institute of Standards and Technology (NIST). With the CSF, NIST established an international standard for improving the cybersecurity of critical infrastructure – the first standard to be mentioned in this article, with version 1.1 published in 2018. Many national security regulations and guidelines refer to the NIST CSF, including those of some EU countries and Switzerland. The North American Electric Reliability Corporation (NERC) CIPC Control Systems Security Working Group also published a mapping of the NIST Cybersecurity Framework to the NERC CIP standards.

Improving substation security therefore starts with identifying your risk level for cyber-attacks (Identify). This includes identifying the assets you want to protect and the attack paths (attack vectors) which could be used to target them. After that, measures to protect against the attack vectors, starting with the biggest risk, can be developed (Protect). If an attacker breaks through one of these measures, this breach must be detected (Detect) and acted upon as quickly as possible (Respond), so that the normal state of the system can be restored quickly (Recover). In the remainder of this article, we focus on standards that aid in these five functions in substation cybersecurity.

What do we Expect from Standards?

There are many definitions of what standards are and what they are good for, but for end users, standards mainly serve two fundamental purposes: to assure a certain quality of a service, procedure or product, and to deliver convenience.

The quality aspect is served by demanding a certain level of sophistication for the implementation of products or services or by imposing best practices as required procedures. An outstanding example for our electrotechnical community is the set of five internationally recognized safety rules for working on electrical installations, as written down, e.g., in EN 50110. Often, standards do not prescribe how products and services are implemented, but which characteristic properties or specifications have to be met. Striking examples are the main voltages and frequencies of our electrical power systems.

The technical aspect behind the convenience is interoperability. Interoperability means that we can use different products or services together because they follow common conventions. This results in a tremendous saving of effort, because the interfaces for combining products and services do not need to be established over and over again for each case. The bottom line is that this comes with limiting choices. Only if there are not too many options is the probability of finding a match high enough to provide a benefit. Thus, a good standard firmly narrows down the options instead of declaring too many variants as allowed.

Therefore, standards must pay attention to the fundamental requirements of the application in the first place and must not look too closely at already existing implementations that might fall into their scope. There are examples of standards that include existing technical variants and ennoble them with the label of being compliant with the standard. One example of this is the “Real-Time Ethernet standard” IEC 61784-2, where each variant already existing in the market was included as a profile. Such a document does not deliver essential added value for the users, since neither the burden of making a choice nor the probability of interoperability issues is reduced.

Kinds of Standards

A standard is a definition with normative impact: something the industry and the users follow because doing so delivers a benefit. There are different kinds of documents and publications that have such a normative impact.

First come the standards which are published by the established standardization organizations (ISO, CEN, CENELEC, ETSI, ANSI, IEC, IEEE, ITU…). Let us call these standards “official standards.” With most of these organizations, the genuine purpose is exactly to produce standards and then earn money from them. There are well-defined structures and procedures in place with the aim of properly covering the fields of expertise. Most international standardization organizations have a global network of national mirror committees to involve experts from all continents. Of course, standards published by those organizations are expected to have a high normative impact.

But there are also other normative references from outside the official standardization organizations, and these may be just as good as official standards. Often, an implementation of a certain service or product is so good and exemplary that it becomes the guideline for a whole technical branch or industry. Inventing something competing simply appears pointless, and all other implementations strive to be interoperable with the role model. Such a standardization by consensus within an industry establishes an “industry standard.” Their normative impact is simply a fact, so they are also called “de-facto standards.” One example of this is the Hewlett-Packard Interface Bus (HP-IB), also called the General Purpose Interface Bus (GPIB). Invented in the 1960s at Hewlett-Packard for interconnecting test and measurement devices, it fit the purpose so well that it was widely adopted in the related industry and is still in use today. Later on, it even evolved into the “real” standards IEEE 488 and IEC 625.

How would an implementation become such a de-facto standard? There are mainly two options: be the first and do it well, or use a unique principle. To move towards the topic of cybersecurity, let’s look at encryption standards that form a great share of the foundations of modern cybersecurity: the Diffie-Hellman-Merkle (DHM) key exchange and the Rivest-Shamir-Adleman (RSA) cryptosystem. These public key systems are based on a thorough understanding of number theory and an ingenious comprehension of how this could be applied in cryptology. Advances in mathematics and computer science made it possible to use these findings for cryptological purposes in the 1970s. Apart from the choice of parameters, the concept was based on a few fundamental mathematical principles. How could you do it differently? Thus, these methods established themselves as de-facto standards.
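To make the principle concrete, the following is a toy sketch of such a key exchange in Python. The prime, the generator, and the private exponents are illustrative assumptions and far too small for real use; actual systems rely on standardized large groups or elliptic curves from a vetted cryptographic library.

```python
# Toy Diffie-Hellman-Merkle key exchange (illustration only, NOT secure).

def dh_public(generator: int, private: int, prime: int) -> int:
    """Public value g^private mod p, which may be sent over an open channel."""
    return pow(generator, private, prime)


def dh_shared(peer_public: int, private: int, prime: int) -> int:
    """Shared secret peer_public^private mod p, computed locally by each side."""
    return pow(peer_public, private, prime)


# Publicly agreed parameters (assumed toy values).
PRIME = 23
GENERATOR = 5

# Each party keeps its private exponent to itself (assumed toy values).
alice_private = 6
bob_private = 15

# Only the public values travel over the network.
alice_public = dh_public(GENERATOR, alice_private, PRIME)
bob_public = dh_public(GENERATOR, bob_private, PRIME)

# Both sides arrive at the same secret without ever transmitting it.
alice_secret = dh_shared(bob_public, alice_private, PRIME)
bob_secret = dh_shared(alice_public, bob_private, PRIME)
```

An eavesdropper sees only the prime, the generator, and the two public values; recovering the secret from them requires solving a discrete logarithm, which is assumed to be infeasible for properly sized parameters.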

Although it is not a preferred approach to tie standardization to the technical options available at a given time, such developments also have to be seen in this context. Newly arising knowledge may make established methods obsolete. The DHM key exchange is already in use with an alternative mathematical principle, elliptic curves (ECDH). The assumed one-way property of the mathematical transformations involved may be proven wrong in the future, or quantum computers may simply break it. But then, new methods like quantum encryption will match the advances in code breaking.

Other Normative Documents

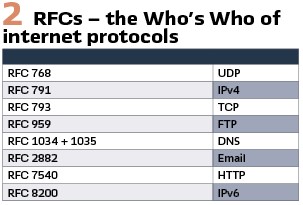

There are several other kinds of publications with normative impact, but when it comes to communications and cybersecurity, there is one other kind to mention in particular: The Requests for Comments (RFCs). When introduced in the late 1960s, these were documents put out for discussion. Today, released RFCs are reviewed technical specifications which are considered to be standards. The process is facilitated by the Internet Society (ISOC) and its associated body, the Internet Engineering Task Force (IETF). The RFCs have an overwhelming normative impact and we will come across them all the time. Just to outline their importance, some prominent examples are listed in Figure 2.

IEC 61850

When it comes to modern protection, automation, and control (PAC) systems, there is no way around IEC 61850. In addition to defining the protocols for transmitting the data to operate and supervise the PAC system itself, the standard also takes care of several surrounding tasks. One of these is system configuration, which led to the creation of the System Configuration Language (SCL). SCL turned out to have many beneficial side effects besides configuring the devices in the PAC system. This is a real achievement of IEC 61850; most other communication standards lack a matching component.

IEC 61850 Aiding in Cyber Risk Management

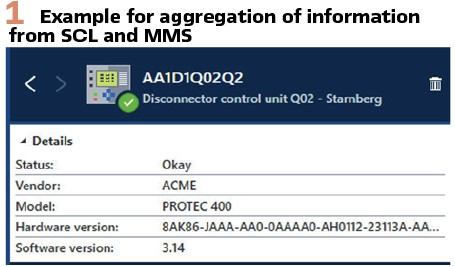

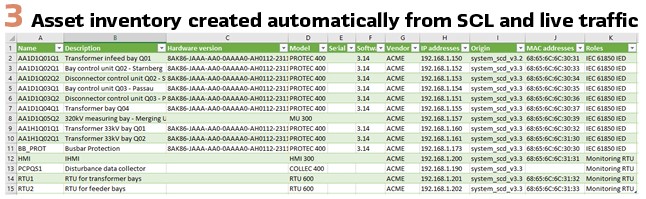

At the end of the IEC 61850 engineering process, regardless of whether the process was top-down or bottom-up, there is an SCD file documenting the instantiated data models of all IEDs. By now, almost all engineering tool vendors put information about the type and model of each IED into the file in an interoperable way. When fully utilized, the data models in the IEDs and the system configuration data complement an inventory in an asset management database. The name plate data in the high-level logical nodes (LPHD, LLN0) hold the model information, serial number, hardware revision, and software revision. When properly populated, these data are available not only offline in the configuration files.

The actual data can be interrogated from the IEDs, so an undocumented firmware upgrade would be reflected in the software revision obtained online through MMS. This fits perfectly into the scope of IEC 62443-3-3 SR 7.8, which requires a current list of installed components and their properties. Similar wording can also be found in ISO/IEC 27001, Annex A.8.1.1. These data, together with other parameters of the communication infrastructure and reports about actual exploits, can then be used in a vulnerability assessment. A patch plan can result from this, as well as an “all good” for IEDs that are not exposed to threats. With an accurate asset inventory, risk management can save a lot of effort in security patching of IEDs by applying only those patches that reduce the risk significantly and address open attack vectors. With IEC 61850 and modern testing and security tools, such an asset inventory can be created with little effort. (Figures 1/3).
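As a minimal sketch of the offline part of such an inventory, the following Python snippet reads the IED entries from an SCL document using only the standard library. The embedded sample file, the IED names, and the vendor names are made up for illustration; the sketch reads only the attributes on the IED element and does not query name plate data online via MMS.

```python
import xml.etree.ElementTree as ET

# XML namespace used by SCL files.
SCL_NS = {"scl": "http://www.iec.ch/61850/2003/SCL"}

# Made-up, heavily shortened SCD content (assumption for illustration).
SAMPLE_SCD = """<SCL xmlns="http://www.iec.ch/61850/2003/SCL">
  <IED name="BayA_Prot1" manufacturer="VendorX" type="Relay100" configVersion="1.2"/>
  <IED name="BayA_Ctrl1" manufacturer="VendorY" type="BCU200" configVersion="3.0"/>
</SCL>
"""


def ied_inventory(scd_xml: str) -> list[dict[str, str]]:
    """Return one record per IED element found in the SCL document."""
    root = ET.fromstring(scd_xml)
    inventory = []
    for ied in root.iterfind("scl:IED", SCL_NS):
        inventory.append(
            {
                "name": ied.get("name", ""),
                "manufacturer": ied.get("manufacturer", ""),
                "type": ied.get("type", ""),
                "configVersion": ied.get("configVersion", ""),
            }
        )
    return inventory


inventory = ied_inventory(SAMPLE_SCD)
for record in inventory:
    print(record)
```

Such records could then be compared with the asset management database, and with revision data read online from the devices, to spot undocumented changes.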

IEC 61850 Supporting Intrusion Detection

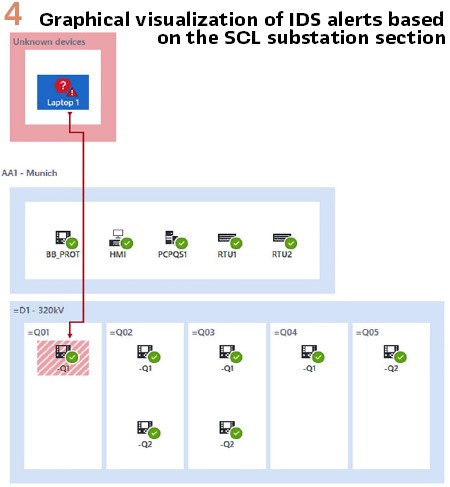

Because the SCD file contains not only the configured data models and communication services, but also information about the substation’s structure and assets, the roles of many IEDs, the configured protocols, and the allowed actions can be identified automatically. This information can be used to automatically configure Intrusion Detection Systems (IDS) on the station and process bus. A modern IDS can import the SCD file of a substation and automatically derive all expected communication for each IED from it.

The IDS can then compare the packets on the network against the data models described in the SCL file. The IDS will also know which variables control critical assets and should therefore only be operated by certain other devices. In non-IEC 61850 substations, a “baseline” of allowed traffic needs to be established first by monitoring the traffic for a few weeks. If the IDS can use the IEC 61850 SCL file as its baseline, it knows the expected behavior immediately. More and more utilities use a top-down engineering approach, where the final SCD file also contains a “substation” section.

This allows tools to automatically create graphical overviews of the substation structure. Further, this allows the creation of security systems with user interfaces that are very close to the single-line diagram of the substation. And finally, this allows security officers and protection and control engineers to work together in analyzing the cause of alerts. This collaboration is essential to speed up response processes after security incidents. (Figure 4).
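The idea of using the SCL file as an IDS baseline can be sketched in a few lines of Python. The sample Communication section, the IED and control block names, and the frame dictionaries are assumptions for illustration; a real IDS checks far more than the destination MAC address and APPID of a GOOSE frame.

```python
import xml.etree.ElementTree as ET

SCL_NS = {"scl": "http://www.iec.ch/61850/2003/SCL"}

# Made-up, heavily shortened SCD content (assumption for illustration).
SAMPLE_SCD_COMM = """<SCL xmlns="http://www.iec.ch/61850/2003/SCL">
  <Communication>
    <SubNetwork name="StationBus">
      <ConnectedAP iedName="BayA_Prot1" apName="AP1">
        <GSE ldInst="LD0" cbName="gcbTrip">
          <Address>
            <P type="MAC-Address">01-0C-CD-01-00-01</P>
            <P type="APPID">0001</P>
          </Address>
        </GSE>
      </ConnectedAP>
    </SubNetwork>
  </Communication>
</SCL>
"""


def expected_goose(scd_xml: str) -> dict[tuple[str, str], str]:
    """Map (destination MAC, APPID) to the publishing IED/control block."""
    root = ET.fromstring(scd_xml)
    baseline = {}
    for ap in root.iterfind(".//scl:ConnectedAP", SCL_NS):
        for gse in ap.iterfind("scl:GSE", SCL_NS):
            params = {
                p.get("type"): (p.text or "").strip()
                for p in gse.iterfind("scl:Address/scl:P", SCL_NS)
            }
            key = (params.get("MAC-Address", "").upper(), params.get("APPID", "").upper())
            baseline[key] = f"{ap.get('iedName')}/{gse.get('cbName')}"
    return baseline


def check_frame(frame: dict[str, str], baseline: dict[tuple[str, str], str]) -> str:
    """Return 'ok' for configured GOOSE traffic, otherwise raise an alert text."""
    key = (frame["dst_mac"].upper(), frame["appid"].upper())
    if key in baseline:
        return "ok"
    return f"ALERT: unexpected GOOSE to {key[0]} with APPID {key[1]}"


baseline = expected_goose(SAMPLE_SCD_COMM)
known = check_frame({"dst_mac": "01-0c-cd-01-00-01", "appid": "0001"}, baseline)
unknown = check_frame({"dst_mac": "01-0C-CD-01-00-99", "appid": "0042"}, baseline)
```

Because the baseline comes from the engineering data rather than from weeks of observed traffic, every frame that is not configured in the SCD file can be flagged from the first day of operation.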

Secure Communication and IEC 61850

Sometimes the GOOSE status and sequence number mechanism is described as a security mechanism, which is not correct. The mechanism may help to detect GOOSE duplicates on the network, but if the messages are faked properly, the original and fake GOOSE messages cannot be distinguished from each other. This means that the receiving IED will act on the message arriving first, but it may notice that an identical GOOSE message follows after that. As a countermeasure, another standard can help: IEC 62351. The most important feature of “secure GOOSE” and “secure MMS,” introduced in IEC 62351 parts 6 and 4, is authentication. Using authentication codes appended to the GOOSE messages, faked GOOSE messages can be detected and ignored by the receiver. These authentication measures rely on cryptographic keys, which need to be enrolled, created, distributed, installed and stored in IEDs, and eventually removed, as described in IEC 62351-9. That part in turn references the X.509 standard by Study Group 17 of the International Telecommunication Union and several IETF RFCs, such as RFC 8894, RFC 4210, RFC 7030, and RFC 5280. The first key distribution systems conformant to IEC 62351 are available, and we expect them to find their place in the standard design for modern substations in the next few years.
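The principle of an appended authentication code can be illustrated with a short Python sketch based on HMAC-SHA256 from the standard library. This is not the wire format or the exact algorithm profile of IEC 62351-6; the key, the payloads, and the function names are hypothetical and serve only to show why a forged message without the key is rejected.

```python
import hashlib
import hmac

TAG_LEN = 32  # an HMAC-SHA256 tag is 32 bytes long


def sign(payload: bytes, key: bytes) -> bytes:
    """Append an authentication tag computed over the payload."""
    tag = hmac.new(key, payload, hashlib.sha256).digest()
    return payload + tag


def verify(message: bytes, key: bytes) -> bool:
    """Check that the trailing tag matches the payload under the shared key."""
    if len(message) < TAG_LEN:
        return False
    payload, tag = message[:-TAG_LEN], message[-TAG_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(tag, expected)


# Hypothetical shared key, as a key distribution system might install it.
group_key = b"example-key-distributed-to-publisher-and-subscribers"

genuine = sign(b"stNum=5;sqNum=0;trip=true", group_key)
forged = sign(b"stNum=6;sqNum=0;trip=true", b"attacker-does-not-know-the-key")
tampered = genuine.replace(b"trip=true", b"trip=fals")

results = (verify(genuine, group_key), verify(forged, group_key), verify(tampered, group_key))
```

The sketch also shows why key management matters so much: the protection is only as good as the secrecy and the lifecycle handling of the key.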

How to Secure Proprietary Communication

There are several misconceptions about the security level of different protocols. Some argue that proprietary protocols are more secure than “open protocols” based on TCP and IP. First of all, it is not the protocols that are insecure by design (leaving out encryption and confidentiality for now); it is the products that have bugs (vulnerabilities) that can be exploited. The problem with proprietary protocols is that the design cannot be reviewed by third parties, and without interoperability requirements, many bugs remain in the products without anybody noticing them. Proprietary protocols also tend to be implemented with less care, since the developers assume that only “friendly” communication partners of the same vendor are at the other end of the communication channel. Generally speaking, attackers usually have an easier job with proprietary protocols than with open standards.

Instead of being more secure than an openly documented and widely used protocol, a legacy protocol is suspicious per se. Deep packet inspection, the scrutinization of the content of a packet down to the last bit, is only possible with well-known protocols, i.e., those which are well documented and tested.

This goes together well with Kerckhoffs’s principle or Shannon’s maxim: the enemy knows your system anyway. Every measure that relies on being a secret will become disclosed and ineffective sooner or later. A system is not secure because it is a secret how it works, but because it is hard to break even if the attacker knows how it works.

Only one thing should need to be kept confidential: the credentials to access the system, which we call the “keys.” No cybersecurity measure that has not been openly documented and exposed to the scrutiny of independent experts can be considered secure at all. Or in other words: never trust anybody who claims to have invented their own security method.

As we can see with IEC 61850 and IEC 62351, the advantage of modern, open protocols is that you can also extend them with state-of-the-art security mechanisms, such as Transport Layer Security (TLS), the successor to Secure Sockets Layer (SSL) and another standard by the IETF. Detailed knowledge about standardized protocols also allows the identification of vulnerable protocols; recognizing outdated versions of, e.g., TLS is such a case.
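As a small illustration of rejecting outdated protocol versions, the following sketch uses Python's standard `ssl` module to build a client context that refuses anything older than TLS 1.2. The choice of TLS 1.2 as the floor and the set of versions treated as outdated are assumptions for this example, not requirements taken from IEC 62351.

```python
import ssl

# Version strings as reported by SSLSocket.version(); treating these as
# outdated is an assumption made for this sketch.
OUTDATED_VERSIONS = {"SSLv2", "SSLv3", "TLSv1", "TLSv1.1"}


def make_client_context() -> ssl.SSLContext:
    """Client context with certificate checks that refuses TLS below 1.2."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def is_outdated(negotiated_version: str) -> bool:
    """Flag a negotiated protocol version that a monitoring tool should report."""
    return negotiated_version in OUTDATED_VERSIONS


context = make_client_context()
```

A connection attempt through this context to a peer that only offers an older version fails during the handshake, while `is_outdated` shows the complementary monitoring view: observing a negotiated version and raising a finding.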

Security Standards

TLS is an example of a standard that specifically deals with data communication security. TLS is again described in RFCs (RFC 5246 for version 1.2 and RFC 8446 for version 1.3) and is used by most websites today. It is so good that secret services can only store the encrypted data and hope to find a way to crack it at some point in the future. The DHM key exchange and the RSA cryptosystem are other examples of security standards and have already been mentioned above. But the standards ecosystem has more to offer for cybersecure protection, automation, and control systems.

Another security concept is Role-Based Access Control (RBAC), which is also laid out in an RFC. RFC 2906 is about “AAA,” which means “Authentication, Authorization and Accounting.” Authentication is proving the identity of the entity requesting access. Authorization is assigning roles to authenticated entities, where the roles are connected to certain rights. Accounting is keeping records of what is going on.

RADIUS is one implementation of the RBAC concept. Several dozen RFCs defining all aspects of RADIUS have been issued since 1997.

While a RADIUS server could by itself hold the credentials of users, this task is typically delegated to a directory server like Active Directory from Microsoft, which uses concepts from X.500. X.500 is a heavyweight protocol that originally relied on an OSI stack and was later modified to use TCP/IP. The Lightweight Directory Access Protocol (LDAP, RFC 4511) proved itself as a leaner alternative and is widely used for this very task. Another protocol in this orbit is Kerberos (RFC 4120), which is sometimes combined with LDAP; Microsoft uses a modified version of Kerberos in Active Directory. There are several other reasons why there is currently no alternative to Active Directory in Windows-based networks. In the substation domain, however, alternatives like OpenLDAP could be investigated.

IEC 62351-8 again describes the use of RBAC for power utility communication. It refers to LDAP, X.509, and RADIUS and how these established methods are to be applied in this application.

The beauty of RBAC lies in the fact that users only need to identify themselves to the authenticator. All further rights for accessing individual assets are managed in the background. For their convenience, users do not need to know (and remember) the individual credentials for the individual assets. To the benefit of security, users also do not know these credentials in the first place. By not knowing them, a user can never leak credentials, and a user who leaves the organization cannot take such credentials along. There will be no need to change such credentials because of changes in personnel. All that is needed is to purge the user from the directory, so that the user cannot log on anymore.
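The interplay of roles, rights, and record keeping can be sketched as follows. The role names, the permissions, and the in-memory directory are hypothetical and do not reproduce the role model of IEC 62351-8; authentication itself is left out and assumed to have happened before `authorize` is called.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-rights table (assumption for illustration).
ROLE_PERMISSIONS = {
    "viewer": {"read_measurements"},
    "operator": {"read_measurements", "operate_breaker"},
    "engineer": {"read_measurements", "change_settings"},
}


@dataclass
class Directory:
    """Stands in for a central directory that maps users to roles."""

    user_roles: dict[str, set[str]] = field(default_factory=dict)
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def authorize(self, user: str, action: str) -> bool:
        """Authorization via roles, with an accounting record for every request."""
        roles = self.user_roles.get(user, set())
        allowed = any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)
        self.audit_log.append((user, action, allowed))
        return allowed

    def purge(self, user: str) -> None:
        """Remove a user centrally; no device credentials need to change."""
        self.user_roles.pop(user, None)


directory = Directory(user_roles={"alice": {"operator"}})
may_operate = directory.authorize("alice", "operate_breaker")
may_configure = directory.authorize("alice", "change_settings")
directory.purge("alice")
after_purge = directory.authorize("alice", "operate_breaker")
```

The user never handles a device password in this model, which is why removing the directory entry is sufficient when someone leaves the organization.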

An analogous method is often used for authenticating devices to a network infrastructure: the Extensible Authentication Protocol (EAP) as applied in IEEE 802.1X.

So, there is no lack of proposed and described methods to provide cybersecurity, just a lack of properly applied implementations.

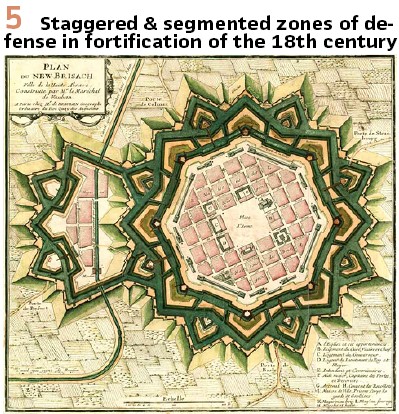

Defense in Depth

For properly defending critical assets, a mix of measures will be required. The fortification architects of the 17th and 18th centuries already sketched some “standard topologies” for fortified places (e.g. Vauban: Traité de l’attaque et de la défense des places). Common patterns of threats were identified and matching countermeasures were proposed. Typically, this was a staged approach of outer and inner defense levels, conceptually more or less anticipating what we call “defense in depth” for cybersecurity today. (Figure 5).

Defense in depth is also one of the two core principles in the IEC 62443 standard series. Applied to the cybersecurity domain, this principle implies that you need multiple layers of defense instead of just one hard shell. It is equally important to have measures for detecting whether one of the layers has been breached, in order to be able to respond before the damage is done – analogous to watch towers in forts. The other core principle in IEC 62443 is structuring the network into “zones and conduits.” Different network zones are assigned different criticality: the corporate IT network is less critical than the management network in the substation, which in turn is less critical than the process bus. By explicitly taking care of the conduits between the zones, we are aware of the interconnections of the networks and can secure them.
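A zone and conduit model can be written down as plain data and checked mechanically. The zone names, criticality levels, and permitted services below are assumptions chosen to mirror the example in the text; they are not taken from IEC 62443.

```python
# Hypothetical zones with an assumed criticality level (higher = more critical).
ZONES = {"corporate_it": 1, "station_mgmt": 2, "process_bus": 3}

# Hypothetical conduits: (source zone, destination zone) -> permitted services.
CONDUITS = {
    ("station_mgmt", "corporate_it"): {"syslog-tls"},
    ("station_mgmt", "process_bus"): {"mms"},
}


def flow_allowed(src_zone: str, dst_zone: str, service: str) -> bool:
    """Allow a cross-zone flow only if a conduit explicitly permits the service."""
    for zone in (src_zone, dst_zone):
        if zone not in ZONES:
            raise ValueError(f"unknown zone: {zone}")
    if src_zone == dst_zone:
        return True  # traffic inside a zone does not cross a conduit
    return service in CONDUITS.get((src_zone, dst_zone), set())


mgmt_to_process = flow_allowed("station_mgmt", "process_bus", "mms")
it_to_process = flow_allowed("corporate_it", "process_bus", "mms")
```

Since no conduit is defined from the corporate IT zone directly to the process bus, that flow is denied by default, which is exactly the kind of interconnection the zone model is meant to make visible.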

Integrating Cybersecurity Components into IT Systems

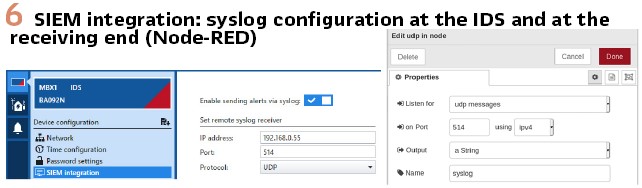

Components in a cybersecurity infrastructure must report their findings to a supervising instance, where events are then noticed and related actions are initiated. For cybersecurity, such a supervising system is usually called a Security Information and Event Management (SIEM) system. Sometimes a SIEM is already present for IT purposes and the OT systems must report to it as well. Some utilities prefer a separate SIEM for their OT environment. So SIEM integration will be required for firewalls and intrusion detection systems in substations. This is again facilitated by standardized protocols, in particular by the syslog protocol (RFC 5424). And again, there is a standardized way of securing this messaging protocol with TLS (RFC 5425: Transport Layer Security (TLS) Transport Mapping for Syslog). For the message content itself, other common conventions serve as de-facto standards, such as the Common Event Format (CEF) proposed by Micro Focus ArcSight. (Figure 6).
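To give an impression of what such a report looks like on the wire, the following sketch assembles an RFC 5424 syslog line whose message part is a CEF record. The vendor, product, host, and event values are invented for illustration, escaping of special characters inside CEF fields is omitted, and the optional syslog fields are left empty.

```python
from datetime import datetime, timezone


def cef_payload(vendor: str, product: str, version: str, event_id: str,
                name: str, severity: int, extension: str = "") -> str:
    """Build a CEF record: seven pipe-separated header fields plus extension."""
    return f"CEF:0|{vendor}|{product}|{version}|{event_id}|{name}|{severity}|{extension}"


def syslog_5424(facility: int, severity: int, hostname: str, app_name: str,
                msg: str, timestamp: datetime) -> str:
    """Format an RFC 5424 line; PROCID, MSGID and STRUCTURED-DATA are set to '-'."""
    pri = facility * 8 + severity  # priority value as defined by the syslog protocol
    ts = timestamp.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return f"<{pri}>1 {ts} {hostname} {app_name} - - - {msg}"


# Hypothetical alert from a substation IDS (all values are assumptions).
event = cef_payload("ExampleVendor", "ExampleIDS", "1.0", "100",
                    "Unexpected GOOSE", 7, "src=10.0.0.5")
line = syslog_5424(
    facility=4,   # security/authorization messages
    severity=4,   # warning
    hostname="ids01",
    app_name="example-ids",
    msg=event,
    timestamp=datetime(2024, 1, 2, 3, 4, 5, tzinfo=timezone.utc),
)
print(line)
```

In a deployment, such lines would be sent to the SIEM over a TLS-protected connection as described in RFC 5425 rather than printed.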

Conclusion: Using standardized protocols and methods greatly supports the cybersecurity in power utility communication networks.

The cybersecurity ecosystem is densely covered by standards, which, when properly applied, reduce the attack surface, ease configuration, provide much insight and analysis, and allow the integration in an overall cybersecurity infrastructure of an organization. So, whatever is to be done in terms of cybersecurity will not be in an unregulated vacuum on unexplored ground, but well embedded in an ecosystem of standards.

Biographies:

Fred Steinhauser studied Electrical Engineering at the Vienna University of Technology, where he obtained his diploma in 1986 and received a Dr. of Technical Sciences in 1991. He joined OMICRON and worked on several aspects of testing power system protection. From 2000, he worked as a product manager with a focus on power utility communication. Since 2014, he has been active within the Power Utility Communication business of OMICRON, focusing on Digital Substations and serving as an IEC 61850 expert. Fred is a member of WG10 in the TC57 of the IEC and contributes to IEC 61850. He is one of the main authors of the UCA Implementation Guideline for Sampled Values (9-2LE). Within TC95, he contributes to IEC 61850 related topics. As a member of CIGRÉ, he is active within the scope of SC D2 and SC B5. He also contributed to the synchrophasor standard IEEE C37.118.

Andreas Klien received the M.Sc. degree in Computer Engineering at the Vienna University of Technology. He joined OMICRON in 2005 and has been working with IEC 61850 since then. Since 2018, Andreas has been responsible for the Power Utility Communication business of OMICRON. His fields of experience are substation communication, SCADA, and power systems cybersecurity. As a member of WG10 in TC57 of the IEC, he is participating in the development of the IEC 61850 standard series.